Technical FAQs

Frequently Asked Questions by Unified Forecast System (UFS) Community Users

This page is dedicated to Technical FAQs. If you are interested in General FAQs, please click here.

UFS code is portable to Linux and Mac operating systems that use Intel or GNU compilers. The code has been tested on a variety of platforms widely used by atmospheric scientists, including NOAA Research & Development HPC Systems (e.g., Hera, Jet, and Gaea); the National Center for Atmospheric Research (NCAR) system, Derecho; and various Mac laptops. EPIC also provides support for running UFS applications in Singularity/Apptainer containers. These containers come with pre-built UFS code and dependencies and can be used on any platform that supports Singularity/Apptainer. Additionally, EPIC supports the use of the UFS on major commercial Cloud Service Providers (CSPs) including Google Cloud, Amazon Web Services (AWS), and Microsoft Azure. Limited support is also available for other non-commercial high-performance computing platforms.

Currently, there are four levels of supported platforms. On preconfigured (Level 1) platforms, the UFS code is expected to build and run out-of-the-box. On configurable (Level 2) platforms, the prerequisite software libraries are expected to install successfully, but they are not available in a central location. Applications and models are expected to build and run without issue once the prerequisite libraries have been built. Limited-test (Level 3) and build-only (Level 4) platforms are platforms where developers have built the code but little or no pre-release testing has been conducted, respectively. View a complete description of the levels of support for more information. Individual applications and components support different subsets of machines. However, the UFS Weather Model provides regression testing support for a standard set of systems.

Before running UFS applications, models, or components, users should determine which of the four levels of support is applicable to their system. Each application, model, and component has its own official list of supported systems, although there is significant overlap between them:

- UFS Weather Model (WM) Supported Platforms and Compilers for Regression Testing

- UFS Short-Range Weather (SRW) App Supported Platforms and Compilers

- UFS Land Data Assimilation (DA) System Supported Platforms and Compilers

- Unified Post Processor (UPP) Supported Systems

- Hurricane Analysis and Forecast System (HAFS) Supported Platforms

Generally, Level 1 & 2 systems are restricted to those with access through NOAA and its affiliates. These systems are named (e.g., Hera, Orion, Derecho), and users will generally know if they have access to one of these systems. Level 3 & 4 systems include certain personal computers or non-NOAA-affiliated HPC systems. Users should look at the list of supported systems for the application, model, or component they want to run and see where their system is listed. If their system is not listed, then it is currently unsupported, although EPIC will still assist users as much as possible with these systems.

Currently, EPIC provides support primarily for Level 1 systems, including Hera, Jet, Gaea, Orion/Hercules, and Derecho. However, EPIC teams have worked with users on the cloud and on other HPC systems, such as S4 and Frontera, to help them run the UFS. The program makes a good faith effort to work with users on any platform (both cloud and on-premises) and has had good success with many but cannot guarantee results.

Currently, the EPIC program supports the following applications:

EPIC also supports the UFS Weather Model (WM), which consists of several individual modeling components (e.g., atmosphere, ocean, wave, sea ice) assembled under one umbrella repository. EPIC works with code managers from the component repositories to facilitate the pull request (PR) process, but the program primarily manages the top-level repository.

The EPIC program supports the following components:

EPIC also collaborates on the UFS Unified Workflow (UW) team to bring UW Tools to the UFS community. uwtools is a modern, open-source Python package that helps automate common tasks needed for many standard numerical weather prediction workflows. It also provides drivers to automate the configuration and execution of UFS components, providing flexibility, interoperability, and usability to various UFS Applications.

UFS code is provided free-of-charge under a variety of open-source licenses (see, e.g., the UFS Weather Model license). The computing power required to run certain applications may require access to a high-performance computing (HPC) system or a cloud-based system. Costs to run the code are platform- and configuration-dependent. Users who have access to an HPC system are likely funded to use that system, but it is best to consult a supervisor and/or system administrator for any specific limitations. Users running on cloud-based platforms should consult the pricing from their cloud service provider (CSP). In general, UFS configurations using higher resolution domains (grids) and/or data assimilation will require relatively more compute resources and will therefore incur greater expense. However, most UFS applications, models, and components have simple, lower cost configurations available.

UFS Code consists of several models, applications, and components, each with its own GitHub repository and accompanying set of documentation. UFS code is publicly available on GitHub.

It is recommended that new users start with one of the latest application releases:

UFS Short-Range Weather (SRW) App v2.2.0

Release Date: 2023-10-31

Release Description: The SRW App v2.2.0 is an update to the v2.1.0 release from November 2022 and reflects a number of changes currently available in the SRW App develop branch. The Application is designed for short-range (up to two days) regional forecasts located anywhere on the globe. It includes a prognostic atmospheric model, pre- and post-processing, and a community workflow for running the system end-to-end. These components are documented within this User’s Guide and supported through GitHub Discussions. Key feature updates for this release include the addition of new supported platforms (i.e., Derecho, Hercules, Gaea C5), the transition to spack-stack modulefiles for most supported platforms to align with the UFS WM shift to spack-stack, and the addition of the supported FV3_RAP physics suite and support for the RRFS_NA_13km predefined grid. A comprehensive list of updates is documented in the GitHub release.

Documentation: SRW App User’s Guide v2.2.0

UFS Land Data Assimilation (DA) System v2.0.0

Release Date: 2024-10-30

Release Description: This release is an update to the version 1.2.0 release from December 2023 and reflects a number of changes currently available in the land-DA_workflow development branch. To improve the internal structure and maintainability of the UFS land-DA_workflow repository, developers have refactored the workflow to adhere more closely to the National Centers for Environmental Prediction’s (NCEP) Central Operations (NCO) Implementation Standards. Additionally, developers have incorporated the Unified Workflow (UW) Tools open-source Python package to improve Rocoto workflow generation and automate common Land DA tasks needed for standard numerical weather prediction (NWP) systems. A comprehensive list of updates is documented in the GitHub release.

In general, applications contain a full set of pre- and post-processing utilities packaged with the UFS Weather Model. They also include documentation for users to get started with the application. The repository wiki often contains additional information, such as code contribution requirements.

Other repositories and documentation for UFS Code can be found at the following locations:

The ufs-community GitHub Discussions page is a great place to post general questions about the UFS. When questions are specific to a particular application, model, or component, it is best to post questions directly to those repositories. Repositories with EPIC-supported GitHub Discussions include:

- UFS Weather Model (WM)

- UFS Short-Range Weather (SRW) Application

- UFS Land Data Assimilation System

- Unified Post Processor (UPP)

When a repository does not include an EPIC-supported Discussions feature, users may post their questions on the ufs-community GitHub Discussions page instead.

Users can also check out the training resources available on the EPIC website at: https://epic.noaa.gov/training-resources/.

UFS models and applications necessitate a collection of software libraries for compilation. These prerequisite libraries are conveniently provided in a unified distribution package known as spack-stack.

The software stack managed by spack-stack comprises two distinct categories of libraries:

- Bundled Libraries: These are the National Centers for Environmental Prediction Libraries (NCEPLIBS) and are specifically developed for use with NOAA’s operational weather model

- Third-Party Libraries: These software packages originate external to the UFS Weather Model and are general-purpose packages used by other models within the UFS community

Note: As of August 2023, the UFS Weather Model transitioned from using HPC-Stack to spack-stack. Spack-stack is the currently supported installation method for the UFS prerequisite software libraries. Users are strongly encouraged to consult the spack-stack documentation and direct any installation or usage inquiries to the spack-stack Q&A forum.

The CCPP team updated its technical documentation for the CCPP v7.0.0 release, which is the most recent standalone CCPP release (September 2024).

Users may also view technical documentation corresponding to the Short-Range Weather (SRW) Application v2.2.0 release (October 2023).

The CCPP team continues to add new developments to the main development branch of its repositories, and these are captured in the latest CCPP technical documentation. Users should know that this documentation may have gaps or errors, since the repository is in active development.

The CCPP team updated its scientific documentation for the CCPP v7.0.0 release, which is the most recent standalone CCPP release (September 2024).

Users may also view scientific documentation corresponding to the Short-Range Weather (SRW) Application v2.2.0 release (October 2023).

The CCPP team continues to add new developments to the main development branch of its repositories. The HEAD of the CCPP repositories is therefore slightly ahead of the scientific documentation.

Each UFS repository maintains its own documentation and/or wiki. Additionally, UFS models and applications use a variety of components, such as pre- and post-processing utilities and physics suites. These components also maintain their own repository, documentation, and (if applicable) wiki page. Users can often view different versions of documentation by clicking on the caret symbol at the bottom left or right of any documentation hosted on Read the Docs.


Users may find the following links helpful as they explore UFS Code:

- UFS_UTILS Preprocessing Utilities

- Unified Post Processor (UPP)

- UPP User’s Guide (technical documentation)

- UPP Scientific Documentation

- Stochastic Physics Documentation

- FV3 Dynamical Core

- Coupled Models:

- Global Workflow wiki

- Community Data Models for Earth predictive Systems (CDEPS): CDEPS Documentation

- Community Mediator for Earth Prediction Systems (CMEPS): CMEPS Documentation

- Earth System Modeling Framework (ESMF) Manual

Other Helpful Links:

On URSA, system-level packages are maintained by NOAA RDHPCS within the /apps/spack-yyyy-mm directory structure. The directory naming convention (/apps/spack-yyyy-mm) signifies the installation location (/apps), the installation method (spack), and the installation date (yyyy-mm). These packages are distinct from the EPIC-maintained /contrib/spack-stack. Therefore, all requests for the installation of missing libraries within the /apps/spack-yyyy-mm environment (e.g., /apps/spack-2024-12) must be submitted via email to the NOAA RDHPCS Help Desk.

EPIC is responsible for the maintenance and management of the spack-stack installations, which are located under /contrib/spack-stack on URSA. Consequently, any requests to install missing libraries within the EPIC-supported /contrib/spack-stack environment must be directed via email to support.epic@noaa.gov.

UFS Weather Model (WM) Questions

The UFS Weather Model (WM) is constantly evolving, and new features are added at a rapid pace. Users can find those features in the develop branch, but documentation is not always available for the latest updates. The UFS WM is tagged frequently for public and operational releases. The ufs-land-da-v2.0.0 tag of the WM is the most recent public release of the UFS WM, which was released as part of the Land Data Assimilation (DA) System v2.0.0. This tag represents a snapshot of a continuously evolving system undergoing open development. Users may also find the ufs-srw-v2.2.0 tag of the WM useful; the UFS WM was released as part of the UFS Short-Range Weather (SRW) Application v2.2.0 using this tag.

Since the UFS WM contains a huge number of components (e.g., dynamical core, physics, ocean coupling, infrastructure), there have been a wide variety of updates since the most recent SRW App and Land DA release tags. Users can peruse the large number of pull requests in the UFS WM repository to gain a full understanding of the changes to the top-level system. For changes to specific components, users should consult the component repositories.

Previous releases of the UFS WM are available, but we recommend using the UFS WM within an application workflow (e.g., SRW App v2.2.0). Alternatively, users can run the develop branch code to check out the latest and greatest features! This code is constantly maintained via regression testing. Users can access information about previous releases of the UFS WM in the User’s Guide for each release:

Users will need to:

- Update input.nml by setting ldiag3d and qdiag3d to .true.

- Update the diag_table according to the instructions in the UFS WM documentation.

Although it may seem counterintuitive, the physics tendencies will be output in sfc*.nc files once the diag_table changes have been made. Even 3D fields will appear there.

Users may find the following GitHub Discussions on this topic informative:

To output a particular variable from FV3ATM, users must update the field section of the diag_table file, which specifies the fields to be output at run time. Only fields registered with register_diag_field(), which is an API in the FMS diag_manager routine, can be used in the diag_table. A line in the field section of the diag_table file contains eight variables with the following format:

"module_name", "field_name", "output_name", "file_name", "time_sampling", "reduction_method", "regional_section", packing

These variables are defined in Table 4.25 of the UFS WM documentation on the diag_table file.

For example, to output accumulated precipitation, the following line must appear in the diag_table file:

"gfs_phys", "totprcp_ave", "prate_ave", "fv3_history2d", "all", .false., "none", 2

Users may refer to diag_table examples in the UFS WM repository. These files are used to configure groups of regression tests. Additionally, information on available FV3ATM and Modular Ocean Model (MOM) 6 variables can be found in the diag_table options section of the UFS Weather Model documentation; diag_table variables from other components will be added as time permits.
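As a rough illustration of the eight-entry layout, the following Python sketch splits the accumulated-precipitation example line into named parts. The names parse_diag_field and FIELD_NAMES are hypothetical helpers introduced here for illustration; they are not part of FMS or the UFS WM, and the sketch assumes that no value contains an embedded comma.

```python
# Hypothetical helper for illustration; not part of FMS diag_manager or the UFS WM.
FIELD_NAMES = [
    "module_name", "field_name", "output_name", "file_name",
    "time_sampling", "reduction_method", "regional_section", "packing",
]


def parse_diag_field(line):
    """Split one diag_table field line into a dict keyed by the eight entry names.

    Assumes values contain no embedded commas.
    """
    parts = [part.strip().strip('"') for part in line.split(",")]
    if len(parts) != len(FIELD_NAMES):
        raise ValueError(f"expected {len(FIELD_NAMES)} entries, found {len(parts)}")
    return dict(zip(FIELD_NAMES, parts))


example = '"gfs_phys", "totprcp_ave", "prate_ave", "fv3_history2d", "all", .false., "none", 2'
fields = parse_diag_field(example)
print(fields["field_name"], "->", fields["file_name"])
```

A check like this can catch a missing or extra comma before the model is run, but it does not confirm that the field is actually registered with register_diag_field().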

View GitHub Discussion #2016 for the question that inspired this FAQ.

Information on diag_table variables has been added to the diag_table options section of the UFS Weather Model documentation. Currently, documentation is only available for variables coming from FV3ATM and the Modular Ocean Model (MOM) 6, but diag_table variables from other components will be added as time permits.

See ufs-community Discussion #33 for the question that inspired this FAQ.

Land DA System FAQs

The most common reason for the first few tasks to go DEAD is an improper path in the parm_xml.yaml configuration file. In particular, EXP_BASEDIR must be set to the directory above land-DA_workflow. For example, if land-DA_workflow resides at Users/Jane.Doe/landda/land-DA_workflow, then EXP_BASEDIR must be set to Users/Jane.Doe/landda. After correcting parm_xml.yaml, users will need to regenerate the workflow XML by running:

uw template render --input-file templates/template.land_analysis.yaml --values-file parm_xml.yaml --output-file land_analysis.yaml

uw rocoto realize --input-file land_analysis.yaml --output-file land_analysis.xml

Then, rewind the DEAD tasks using rocotorewind (as described in the documentation), and use rocotorun/rocotostat to advance/check on the workflow (see Section 2.1.5.2 of the documentation for how to do this).

If the first few tasks run successfully, but future tasks go DEAD, users will need to check the experiment log files, located at $EXP_BASEDIR/ptmp/test/com/output/logs. It may also be useful to check that the JEDI directory and other paths and values are correct in parm_xml.yaml.
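The EXP_BASEDIR rule described above (the directory one level above land-DA_workflow) can be sketched in Python as follows. The function name exp_basedir_for is a hypothetical helper used only for illustration; the Land DA System does not provide it.

```python
from pathlib import PurePosixPath


def exp_basedir_for(workflow_dir):
    """Return the directory above land-DA_workflow, i.e., the expected EXP_BASEDIR."""
    path = PurePosixPath(workflow_dir)
    if path.name != "land-DA_workflow":
        raise ValueError(f"{workflow_dir!r} does not end in land-DA_workflow")
    return str(path.parent)


# Path taken from the example in the text above.
print(exp_basedir_for("Users/Jane.Doe/landda/land-DA_workflow"))
```

Comparing this value against the EXP_BASEDIR entry in parm_xml.yaml is a quick way to rule out the most common cause of early DEAD tasks.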

UFS Short-Range Weather (SRW) Application (App) Questions

At this time, there are ten physics suites available in the SRW App, five of which are fully supported. However, several additional physics schemes are available in the UFS Weather Model (WM) and can be enabled in the SRW App. Note that when users enable new physics schemes in the SRW App, they are using untested and unverified combinations of physics, which can lead to unexpected and/or poor results. It is recommended that users run experiments only with the supported physics suites and physics schemes unless they have an excellent understanding of how these physics schemes work and a specific research purpose in mind for making such changes.

To enable an additional physics scheme, such as the Yonsei University (YSU) planetary boundary layer (PBL) scheme, users may need to modify ufs-srweather-app/parm/FV3.input.yml. This is necessary when the namelist has a logical variable corresponding to the desired physics scheme. In this case, it should be set to True for the physics scheme they would like to use (e.g., do_ysu = True).

It may be necessary to disable another physics scheme, too. For example, when using the YSU PBL scheme, users should disable the default SATMEDMF PBL scheme (satmedmfvdifq) by setting the satmedmf variable to False in the FV3.input.yml file.

It may also be necessary to add or subtract interstitial schemes, so that the communication between schemes and between schemes and the host model is in order. For example, it is necessary that the connections between clouds and radiation are correctly established.

Regardless, users will need to modify the suite definition file (SDF) and recompile the code. For example, to activate the YSU PBL scheme, users should replace the line <scheme>satmedmfvdifq</scheme> with <scheme>ysuvdif</scheme> and recompile the code.
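The scheme swap described above can also be scripted. The sketch below is a minimal illustration using Python's standard xml.etree.ElementTree module; the SDF fragment is a simplified, hypothetical stand-in (a real suite file lists many more schemes), and swap_scheme is an illustrative helper rather than a CCPP or SRW App utility.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical SDF fragment; a real suite file lists many more schemes.
SDF_TEXT = """<suite name="example_suite" version="1">
  <group name="physics">
    <subcycle loop="1">
      <scheme>satmedmfvdifq</scheme>
    </subcycle>
  </group>
</suite>"""


def swap_scheme(sdf_text, old, new):
    """Replace every <scheme>old</scheme> entry with <scheme>new</scheme>."""
    root = ET.fromstring(sdf_text)
    for scheme in root.iter("scheme"):
        if scheme.text and scheme.text.strip() == old:
            scheme.text = new
    return ET.tostring(root, encoding="unicode")


updated = swap_scheme(SDF_TEXT, "satmedmfvdifq", "ysuvdif")
print(updated)
```

Editing the SDF this way does not remove the need to recompile, and it does not adjust any interstitial schemes that the new scheme may require.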

Depending on the scheme, additional changes to the SDF (e.g., to add, remove, or change interstitial schemes) and to the namelist (to include scheme-specific tuning parameters) may be required. Users are encouraged to reach out on GitHub Discussions to find out more from subject matter experts about recommendations for the specific scheme they want to implement.

After making appropriate changes to the SDF and namelist files, users must ensure that they are using the same physics suite in their config.yaml file as the one they modified in FV3.input.yml. Then, run the generate_FV3LAM_wflow.py script to generate an experiment and navigate to the experiment directory. Users should see do_ysu = .true. in the namelist file (or similar, depending on the physics scheme selected), which indicates that the YSU PBL scheme is enabled.

You can change default parameters for a workflow task by setting them to a new value in the rocoto: tasks: section of the config.yaml file. First, be sure that the task you want to change is part of the default workflow or included under taskgroups: in the rocoto: tasks: section of config.yaml. For instructions on how to add a task to the workflow, see this FAQ.

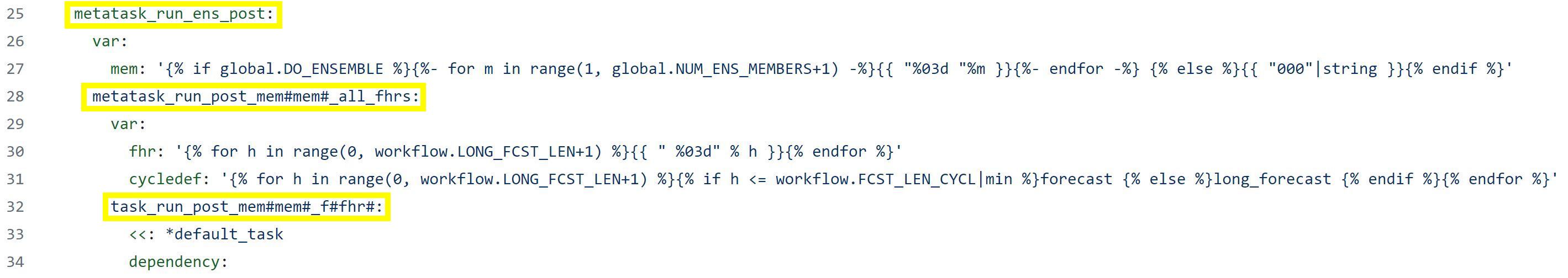

Once you verify that the task you want to modify is included in your workflow, you can configure the task by adding it to the rocoto: tasks: section of config.yaml. Users should refer to the YAML file where the task is defined to see how to structure the modifications (these YAML files reside in ufs-srweather-app/parm/wflow). For example, to change the wall clock time from 15 to 20 minutes for the run_post_mem###_f### tasks, users would look at post.yaml, where the post-processing tasks are defined. Formatting for tasks and metatasks should match the structure in this YAML file exactly.

Excerpt of post.yaml

Since the run_post_mem###_f### task in post.yaml comes under metatask_run_ens_post and metatask_run_post_mem#mem#_all_fhrs, all of these tasks and metatasks must be included under rocoto: tasks: before defining the walltime variable. Therefore, to change the walltime from 15 to 20 minutes, the rocoto: tasks: section should look like this:

rocoto:
  tasks:
    metatask_run_ens_post:
      metatask_run_post_mem#mem#_all_fhrs:
        task_run_post_mem#mem#_f#fhr#:
          walltime: 00:20:00

Notice that this section contains all three of the tasks/metatasks named above and lists the walltime where the details of the task begin. While users may simply adjust the walltime variable in post.yaml, learning to make these changes in config.yaml allows for greater flexibility in experiment configuration. Users can modify a single file (config.yaml), rather than (potentially) several workflow YAML files, and can account for differences between experiments instead of hard-coding a single value.
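To see why the full nesting matters, consider the following Python sketch of a recursive dictionary merge. It is only an analogy under stated assumptions: the actual merge logic in the SRW App workflow generation scripts may differ, deep_update is a hypothetical helper, and the defaults dictionary is a stand-in for post.yaml that shows only the walltime setting.

```python
import copy


def deep_update(defaults, overrides):
    """Return a copy of defaults with overrides merged in, key by key."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_update(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Stand-in for the post.yaml defaults; other task settings are omitted.
defaults = {
    "metatask_run_ens_post": {
        "metatask_run_post_mem#mem#_all_fhrs": {
            "task_run_post_mem#mem#_f#fhr#": {"walltime": "00:15:00"},
        },
    },
}

# Mirrors the rocoto: tasks: override shown above.
overrides = {
    "metatask_run_ens_post": {
        "metatask_run_post_mem#mem#_all_fhrs": {
            "task_run_post_mem#mem#_f#fhr#": {"walltime": "00:20:00"},
        },
    },
}

merged = deep_update(defaults, overrides)
task = merged["metatask_run_ens_post"]["metatask_run_post_mem#mem#_all_fhrs"][
    "task_run_post_mem#mem#_f#fhr#"
]
print(task["walltime"])
```

In a merge of this kind, an override placed at the wrong nesting level would create a new, unrelated key instead of changing the task's walltime, which is why the structure must match the task definition exactly.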

See SRW Discussion #990 for the question that inspired this FAQ.

The predefined grids included with the SRW App are configured to run with 65 levels by default. However, advanced users may wish to vary the number of vertical levels in the grids they are using. The SRW App explains this process in the Limited Area Model Grid chapter of the documentation.

In general, there are two options for using more compute power: (1) increase the number of processing elements (PEs) or (2) enable more threads.

Increase Number of PEs

PEs are processing elements, which correspond to the number of message passing interface (MPI) processes/tasks. In the SRW App, PE_MEMBER01 is the number of MPI processes required by the forecast. It is generally calculated by OMP_NUM_THREADS_RUN_FCST * (LAYOUT_X * LAYOUT_Y + WRTCMP_write_groups * WRTCMP_write_tasks_per_group) when QUILTING is true. Since these variables are connected, it is recommended that users consider how many processors they want to use to run the forecast model and work backwards to determine the other values.
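The expression above can be evaluated with a short Python function. This is a minimal sketch of the formula as quoted in the text (with QUILTING set to true); the function name pe_member01 and the sample values are illustrative and are not taken from a predefined grid.

```python
def pe_member01(omp_threads, layout_x, layout_y, write_groups, write_tasks_per_group):
    """Evaluate the PE_MEMBER01 expression quoted above (QUILTING true)."""
    compute_tasks = layout_x * layout_y
    write_tasks = write_groups * write_tasks_per_group
    return omp_threads * (compute_tasks + write_tasks)


# Illustrative values only, not taken from a predefined grid.
total = pe_member01(
    omp_threads=2, layout_x=5, layout_y=2, write_groups=1, write_tasks_per_group=2
)
print(total)
```

With these sample values, the compute tasks (5 * 2 = 10) and write tasks (1 * 2 = 2) sum to 12, and multiplying by 2 threads gives 24.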

For simplicity, it is often best to set WRTCMP_write_groups to 1. It may be necessary to increase this number in cases where a single write group cannot finish writing its output before the model is ready to write again. This occurs when the model produces output at very short time intervals.

The WRTCMP_write_tasks_per_group value will depend on domain (i.e., grid) size. This means that a larger domain would require a higher value, while a smaller domain would likely require fewer than 5 tasks per group.

The LAYOUT_X and LAYOUT_Y variables are the number of MPI tasks to use in the horizontal x and y directions of the regional grid when running the forecast model. Note that the LAYOUT_X and LAYOUT_Y variables only affect the number of MPI tasks used to compute the forecast, not resolution of the grid. The larger these values are, the more work is involved when generating a forecast. That work can be spread out over more MPI processes to increase the speed, but this requires more computational resources. There is a limit where adding more MPI processes will no longer increase the speed at which the forecast completes, but the UFS scales well into the thousands of MPI processes.

Users can take a look at the SRW App predefined grids to get a better sense of what values to use for different types of grids. The Computational Parameters and Write Component Parameters sections of the SRW App User’s Guide define these variables.

Enable More Threads

In general, enabling more threads offers less increase in performance than doubling the number of PEs. However, it uses less memory and still improves performance. Threading is on by default in the SRW App (OMP_NUM_THREADS_RUN_FCST is set to 2). To turn it off, set OMP_NUM_THREADS_RUN_FCST: 1, and to enable more threading, set OMP_NUM_THREADS_RUN_FCST to a higher number (e.g., 4). When increasing the value, it must be a factor of the number of cores/CPUs (the number of MPI tasks * Open Multi-Processing (OMP) threads cannot exceed the number of cores per node). Typically, it is best not to raise this value higher than 4 or 5 because there is a limit to the improvement possible via OpenMP parallelization (compared to MPI parallelization, which is significantly more efficient).
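The two constraints on the thread count can be checked with a small Python sketch. It assumes that "a factor of the number of cores" means the thread count divides the cores per node evenly; the function names and the 40-core node size are hypothetical and do not describe any particular platform.

```python
def valid_thread_counts(cores_per_node, max_threads=4):
    """List OMP thread counts that divide the cores per node evenly, up to max_threads."""
    return [n for n in range(1, max_threads + 1) if cores_per_node % n == 0]


def max_mpi_tasks_per_node(cores_per_node, omp_threads):
    """Largest MPI task count per node such that tasks * threads <= cores per node."""
    return cores_per_node // omp_threads


cores = 40  # hypothetical node size
for threads in valid_thread_counts(cores):
    print(threads, max_mpi_tasks_per_node(cores, threads))
```

For the hypothetical 40-core node, 3 threads would be rejected because 40 is not divisible by 3, while 4 threads would leave room for at most 10 MPI tasks per node.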

In almost every case, it is best to regenerate the experiment from scratch, even if most of the experiment ran successfully and the modification seems minor. Some variable checks are performed in the workflow generation step, while others are done at runtime. Some settings are changed based on the cycle, and some changes may be incompatible with the output of a previous task. At this time, there is no general way to partially rerun an experiment with different settings, so it is almost always better just to regenerate the experiment from scratch.

The exception to this rule is tasks that failed due to platform reasons (e.g., disk space, incorrect file paths). In these cases, users can refer to the FAQ on how to restart a DEAD task.

Users who are insistent on modifying and rerunning an experiment that fails for non-platform reasons would need to modify variables in config.yaml and var_defns.sh at a minimum. Modifications to rocoto_defns.yaml and FV3LAM_wflow.xml may also be necessary. However, even with modifications to all appropriate variables, the task may not run successfully due to task dependencies or other factors mentioned above. If there is a compelling need to make such changes in place (e.g., resource shortage for expensive experiments), users are encouraged to reach out via GitHub Discussions for advice.

See SRW Discussion #995 for the question that inspired this FAQ.

When users make changes to one of the SRW App executables, they can rerun the devbuild.sh script using the command ./devbuild.sh --platform=<machine_name>. This will eventually bring up three options: [R]emove, [C]ontinue, or [Q]uit.

The Continue option will recompile the modified routines and rebuild only the affected executables. The Remove option provides a clean build; it completely removes the existing build directory and rebuilds all executables from scratch instead of reusing the existing build where possible. The build log files for the CMake and Make step will appear in ufs-srweather-app/build/log.cmake and ufs-srweather-app/build/log.make; any errors encountered should be detailed in those files.

Users should note that the Continue option may not work as expected for changes to CCPP because the ccpp_prebuild.py script will not be rerun. It is typically best to recompile the model entirely in this case by selecting the Remove option for a clean build.

A convenience script, devclean.sh, is also available. This script can be used to remove build artifacts in cases where something goes wrong with the build or where changes have been made to the source code and the executables need to be rebuilt. Users can run this script by entering either ./devclean.sh --clean or ./devclean.sh -a. Following this step, they can rerun the devbuild.sh script to rebuild the SRW App. Running ./devclean.sh -h will list additional options available.

See SRW Discussion #1007 for the question that inspired this FAQ.

If you logged out before building the SRW App, you can restart your work from the step you left off on before logging out.

If you already built the SRW App, you can simply reload the conda environment and then pick up where you left off:

source /path/to/ufs-srweather-app/etc/lmod-setup.sh <platform>

module use /path/to/ufs-srweather-app/modulefiles

module load wflow_<platform>

conda activate srw_app

For example, from here, you can configure a new experiment (in config.yaml) or check on progress from an old experiment (e.g., using rocotostat or the tail command from within the experiment directory).

To configure a new experiment, users can simply modify variables in their existing ush/config.yaml file. When the user generates a new experiment by running ./generate_FV3LAM_wflow.py, the contents of their ush/config.yaml file are copied into the new experiment directory and used throughout the new experiment. Even though modifying ush/config.yaml overwrites the contents of that file, everything needed for a given experiment will be copied or linked to its experiment directory when generating an experiment. Presuming that the user either changes the EXPT_SUBDIR name or keeps the default PREEXISTING_DIR_METHOD: rename setting, details of any previous configuration can be found in the config.yaml copy in that experiment’s directory.

The ufs-srweather-app repository contains a devclean.sh convenience script. This script can be used to clean up code if something goes wrong when checking out externals or building the application. To view usage instructions and to get help, run with the -h or --help flag:

./devclean.sh -h

To remove all the build artifacts and directories except conda installation, use the -b or --build flag:

./devclean.sh --build

When running the SRW App in a container, use the --container option, which removes the container-bin directory in lieu of the exec directory, i.e.:

./devclean.sh --container

To remove only conda directory and conda_loc file in the main SRW directory, run with the -c or --conda flag:

./devclean.sh --conda

OR

./devclean.sh -c

To remove external submodules, run with the -s or --sub-modules flag:

./devclean.sh --sub-modules

To remove all build artifacts, conda and submodules (equivalent to `-b -c -s`), run with the -a or --all flag:

./devclean.sh --all

Users will need to check out the external submodules again before building the application.

The name of the experiment is set in the workflow: section of the config.yaml file using the variable EXPT_SUBDIR. See Section 2.4.3.2.2 and/or Section 3.1.3.2 of the User’s Guide for more details.

The SDF is set in the workflow: section of the config.yaml file using the variable CCPP_PHYS_SUITE. The five supported physics suites for the SRW Application are:

FV3_GFS_v16

RRFS_sas

FV3_HRRR

FV3_WoFS_v0

FV3_RAP

When users run the generate_FV3LAM_wflow.py script, the SDF file is copied from its location in the forecast model directory to the experiment directory $EXPTDIR. For more information on the CCPP physics suite parameters, see Section 3.1.3.8.
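A quick sanity check against the five supported suites listed above might look like the following Python sketch. The config dictionary stands in for a parsed config.yaml, and is_supported_suite is a hypothetical helper; the SRW App performs its own validation, which this sketch does not reproduce.

```python
# The five supported suites listed above.
SUPPORTED_SUITES = ("FV3_GFS_v16", "RRFS_sas", "FV3_HRRR", "FV3_WoFS_v0", "FV3_RAP")


def is_supported_suite(config):
    """Return True when workflow.CCPP_PHYS_SUITE names one of the supported suites."""
    suite = config.get("workflow", {}).get("CCPP_PHYS_SUITE")
    return suite in SUPPORTED_SUITES


# Stand-in for a parsed config.yaml; only the relevant key is shown.
config = {"workflow": {"CCPP_PHYS_SUITE": "FV3_RAP"}}
print(is_supported_suite(config))
```

A suite name outside this list is not necessarily invalid, since other suites are available in the SRW App, but it falls outside the fully supported set.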

To change the predefined grid, modify the PREDEF_GRID_NAME variable in the task_run_fcst: section of the config.yaml file (see Section 2.4.3.2.2 of the User’s Guide for details on creating and modifying the config.yaml file). The five supported predefined grids as of the SRW Application v3.0.0 release are:

RRFS_CONUS_3km

RRFS_CONUS_13km

RRFS_CONUS_25km

SUBCONUS_Ind_3km

RRFS_NA_13km

However, users can choose from a variety of predefined grids listed in Section 3.1.3.11 of the User’s Guide. An option also exists to create a user-defined grid, with information available in Section 3.3.2. However, the user-defined grid option is not fully supported as of the v3.0.0 release and is provided for informational purposes only.

Section 2.4.3.2.2.2 of the User’s Guide provides a full description of how to turn on/off workflow tasks.

The default workflow tasks are defined in ufs-srweather-app/parm/wflow/default_workflow.yaml. However, the /parm/wflow directory contains several YAML files that configure different workflow task groups. Each file contains a number of tasks that are typically run together (see Table 2.7 for a description of each task group). To add or remove workflow tasks, users will need to alter the user configuration file (config.yaml) as described in Section 2.4.3.2.2.2 to override the default workflow and run the selected tasks and task groups.

In general, there are two options for using more compute power: (1) increase the number of PEs or (2) enable more threads.

Increase Number of PEs

PEs are processing elements, which correspond to the number of MPI processes/tasks. In the SRW App, PE_MEMBER01 is the number of MPI processes required by the forecast. It is calculated by:

OMP_NUM_THREADS_RUN_FCST * (LAYOUT_X * LAYOUT_Y + WRTCMP_write_groups * WRTCMP_write_tasks_per_group) + FIRE_NUM_TASKS

when QUILTING is true. Since these variables are connected, it is recommended that users consider how many processors they want to use to run the forecast model and work backwards to determine the other values.

For simplicity, it is often best to set WRTCMP_write_groups to 1. It may be necessary to increase this number in cases where a single write group cannot finish writing its output before the model is ready to write again. This occurs when the model produces output at very short time intervals.

The WRTCMP_write_tasks_per_group value will depend on domain (i.e., grid) size. A larger domain would require a higher value, while a smaller domain would likely require fewer than 5 tasks per group.

OMP_NUM_THREADS_RUN_FCST is the number of OpenMP threads to use for parallel regions. The LAYOUT_X and LAYOUT_Y variables are the number of MPI tasks to use in the horizontal x and y directions of the regional grid when running the forecast model, and FIRE_NUM_TASKS is the number of MPI tasks assigned to the FIRE_BEHAVIOR component. Note that LAYOUT_X and LAYOUT_Y only affect the number of MPI tasks used to compute the forecast, not the resolution of the grid. The larger these values are, the more work is involved when generating a forecast. That work can be spread out over more MPI processes to increase the speed, but this requires more computational resources. There is a limit beyond which adding more MPI processes will no longer increase the speed at which the forecast completes, but the UFS scales well into the thousands of MPI processes.

Users can take a look at the SRW App predefined grids to get a better sense of what values to use for different types of grids. The Computational Parameters and Write Component Parameters sections of the SRW App User’s Guide define these variables.
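The arithmetic above can be sketched in a few lines of Python. This is only an illustration of the formula as quoted in this FAQ, not code from the SRW App; the function name, the example values, and the handling of the QUILTING-false case (dropping the write-component term) are assumptions.

```python
def pe_member01(omp_threads, layout_x, layout_y,
                write_groups, write_tasks_per_group,
                fire_num_tasks=0, quilting=True):
    """Evaluate the PE_MEMBER01 formula as quoted in this FAQ."""
    compute_tasks = layout_x * layout_y
    # Assumption: the write-component term is only counted when QUILTING is true.
    write_tasks = write_groups * write_tasks_per_group if quilting else 0
    return omp_threads * (compute_tasks + write_tasks) + fire_num_tasks


# Hypothetical values, chosen only to show the arithmetic:
# 1 * (5 * 2 + 1 * 2) + 0 = 12
print(pe_member01(omp_threads=1, layout_x=5, layout_y=2,
                  write_groups=1, write_tasks_per_group=2))
```

Working backwards, as recommended above, amounts to trying LAYOUT_X, LAYOUT_Y, and write-component values until the result matches the number of processors available.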

Enable More Threads

In general, enabling more threads offers less increase in performance than doubling the number of PEs. However, it uses less memory and still improves performance. To enable more threading, set OMP_NUM_THREADS_RUN_FCST to a higher number (e.g., 2 or 4). When increasing the value, it must be a factor of the number of cores/CPUs (number of MPI tasks * OMP threads cannot exceed the number of cores per node). Typically, it is best not to raise this value higher than 4 or 5 because there is a limit to the improvement possible via OpenMP parallelization (compared to MPI parallelization, which is significantly more efficient).
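The two constraints in the paragraph above can be checked before submitting a job. The following is a minimal sketch; the function name and the node sizes in the example are hypothetical, and the check only encodes the two rules stated here (the thread count divides the cores per node, and MPI tasks per node times OMP threads does not exceed the cores per node).

```python
def check_threading(cores_per_node, mpi_tasks_per_node, omp_threads):
    """Return a list of problems with a proposed MPI/OpenMP layout."""
    problems = []
    # Rule 1: the thread count should be a factor of the number of cores.
    if cores_per_node % omp_threads != 0:
        problems.append(
            f"{omp_threads} threads is not a factor of {cores_per_node} cores")
    # Rule 2: MPI tasks * OMP threads cannot exceed the cores per node.
    if mpi_tasks_per_node * omp_threads > cores_per_node:
        problems.append(
            f"{mpi_tasks_per_node} tasks x {omp_threads} threads "
            f"exceeds {cores_per_node} cores per node")
    return problems


# Hypothetical 40-core node: 20 MPI tasks with 2 threads each fits exactly.
print(check_threading(cores_per_node=40, mpi_tasks_per_node=20, omp_threads=2))
# 24 MPI tasks with 2 threads each would oversubscribe the node.
print(check_threading(cores_per_node=40, mpi_tasks_per_node=24, omp_threads=2))
```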

The first three pre-processing tasks (make_grid, make_orog, and make_sfc_climo) are cycle-independent, meaning that they only need to be run once per experiment. By default, the workflow will run these tasks. However, if the grid, orography, and surface climatology files that these tasks generate are already available (e.g., from a previous experiment that used the same grid as the current experiment), then these tasks can be skipped, and the workflow can use those pre-generated files.

To skip these tasks, remove parm/wflow/prep.yaml from the list of task groups in the Rocoto section of the configuration file (config.yaml):

taskgroups:
  - parm/wflow/coldstart.yaml
  - parm/wflow/post.yaml

Then, add the appropriate tasks and paths to the previously generated grid, orography, and surface climatology files to config.yaml:

task_make_grid:
  GRID_DIR: /path/to/directory/containing/grid/files
task_make_orog:
  OROG_DIR: /path/to/directory/containing/orography/files
task_make_sfc_climo:
  SFC_CLIMO_DIR: /path/to/directory/containing/surface/climatology/files

All three sets of files may be placed in the same directory location (and would therefore have the same path), but they can also reside in different directories and use different paths.

You can change default parameters for a workflow task by setting them to a new value in the rocoto: tasks: section of the config.yaml file. First, be sure that the task you want to change is part of the default workflow or included under taskgroups: in the rocoto: tasks: section of config.yaml. For instructions on how to add a task to the workflow, see this FAQ.

Once you verify that the task you want to modify is included in your workflow, you can configure the task by adding it to the rocoto: tasks: section of config.yaml. Users should refer to the YAML file where the task is defined to see how to structure the modifications (these YAML files reside in ufs-srweather-app/parm/wflow). For example, to change the wall clock time from 15 to 20 minutes for the run_post_mem###_f### tasks, users would look at post.yaml, where the post-processing tasks are defined. Formatting for tasks and metatasks should match the structure in this YAML file exactly.

Fig. Excerpt of post.yaml

Since the run_post_mem###_f### task in post.yaml comes under metatask_run_ens_post and metatask_run_post_mem#mem#_all_fhrs, all of these tasks and metatasks must be included under rocoto: tasks: before defining the walltime variable. Therefore, to change the walltime from 15 to 20 minutes, the rocoto: tasks: section should look like this:

task_run_post:
  upp:
    execution:
      batchargs:
        walltime: 00:20:00

Notice that this section contains all three of the tasks/metatasks highlighted in yellow above and lists the walltime where the details of the task begin. While users may simply adjust the walltime variable in post.yaml, learning to make these changes in config.yaml allows for greater flexibility in experiment configuration. Users can modify a single file (config.yaml), rather than (potentially) several workflow YAML files, and can account for differences between experiments instead of hard-coding a single value.
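To see why the full chain of parent keys has to be repeated in config.yaml, it can help to picture the override as a recursive merge onto the defaults. The sketch below is a generic illustration in Python, not the SRW App's actual configuration code; the deep_update function is hypothetical, the nested keys are copied from the snippet above, and the nodes entry is an invented sibling setting used only to show that untouched defaults survive.

```python
import copy


def deep_update(defaults, overrides):
    """Recursively merge overrides into a copy of defaults."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Descend into matching sections instead of replacing them wholesale.
            merged[key] = deep_update(merged[key], value)
        else:
            merged[key] = value
    return merged


# Stand-in for the defaults from a workflow YAML file ("nodes" is hypothetical).
defaults = {"task_run_post": {"upp": {"execution": {"batchargs": {
    "walltime": "00:15:00", "nodes": 2}}}}}

# Stand-in for the user's override: same nesting, one changed value.
overrides = {"task_run_post": {"upp": {"execution": {"batchargs": {
    "walltime": "00:20:00"}}}}}

merged = deep_update(defaults, overrides)
print(merged["task_run_post"]["upp"]["execution"]["batchargs"])
```

Only a key that sits at the same nesting level as its default replaces that default; a walltime entry placed at the wrong level would be added as a new, unused key rather than overriding anything.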

See SRW Discussion #990 for the question that inspired this FAQ.

When users make changes to one of the SRW App executables, they can rerun the devbuild.sh script using the command ./devbuild.sh --platform=<machine_name>. This will eventually bring up three options: [R]emove, [C]ontinue, or [Q]uit.

The Continue option will recompile the modified routines and rebuild only the affected executables. The Remove option provides a clean build; it completely removes the existing build directory and rebuilds all executables from scratch instead of reusing the existing build where possible. The build log files for the CMake and Make steps will appear in ufs-srweather-app/build/log.cmake and ufs-srweather-app/build/log.make; any errors encountered should be detailed in those files.

Users should note that the Continue option may not work as expected for changes to CCPP because the ccpp_prebuild.py script will not be rerun. It is typically best to recompile the model entirely in this case by selecting the Remove option for a clean build.

A convenience script, devclean.sh, is also available. This script can be used to remove build artifacts in cases where something goes wrong with the build or where changes have been made to the source code and the executables need to be rebuilt. Users can run this script by entering ./devclean.sh -a. Following this step, they can rerun the devbuild.sh script to rebuild the SRW App. Running ./devclean.sh -h will list additional options available.

See SRW Discussion #1007 for the question that inspired this FAQ.

At this time, there are ten physics suites available in the SRW App, five of which are fully supported. However, several additional physics schemes are available in the UFS Weather Model (WM) and can be enabled in the SRW App. The CCPP Scientific Documentation details the various namelist options available in the UFS WM, including physics schemes, and also includes an overview of schemes and suites.

! Attention

Note that when users enable new physics schemes in the SRW App, they are using untested and unverified combinations of physics, which can lead to unexpected and/or poor results. It is recommended that users run experiments only with the supported physics suites and physics schemes unless they have an excellent understanding of how these physics schemes work and a specific research purpose in mind for making such changes.

To enable an additional physics scheme, such as the YSU PBL scheme, users may need to modify ufs-srweather-app/parm/FV3.input.yml. This is necessary when the namelist has a logical variable corresponding to the desired physics scheme. In this case, it should be set to True for the physics scheme they would like to use (e.g., do_ysu = True).

It may be necessary to disable another physics scheme, too. For example, when using the YSU PBL scheme, users should disable the default SATMEDMF PBL scheme (satmedmfvdifq) by setting the satmedmf variable to False in the FV3.input.yml file.

It may also be necessary to add or subtract interstitial schemes, so that the communication between schemes and between schemes and the host model is in order. For example, it is necessary that the connections between clouds and radiation are correctly established.

Regardless, users will need to modify the suite definition file (SDF) and recompile the code. For example, to activate the YSU PBL scheme, users should replace the line <scheme>satmedmfvdifq</scheme> with <scheme>ysuvdif</scheme> and recompile the code.

Depending on the scheme, additional changes to the SDF (e.g., to add, remove, or change interstitial schemes) and to the namelist (to include scheme-specific tuning parameters) may be required. Users are encouraged to reach out on GitHub Discussions to find out more from subject matter experts about recommendations for the specific scheme they want to implement. Users can post on the SRW App Discussions page or ask their questions directly to the developers of ccpp-physics and ccpp-framework, which also handle support through GitHub Discussions.

After making appropriate changes to the SDF and namelist files, users must ensure that they are using the same physics suite in their config.yaml file as the one they modified in FV3.input.yml. Then, the user can run the generate_FV3LAM_wflow.py script to generate an experiment and navigate to the experiment directory. They should see do_ysu = .true. in the namelist file (or a similar statement, depending on the physics scheme selected), which indicates that the YSU PBL scheme is enabled.

On platforms that utilize Rocoto workflow software (such as NCAR’s Derecho machine), if something goes wrong with the workflow, a task may end up in the DEAD state:

       CYCLE            TASK    JOBID   STATE  EXIT STATUS  TRIES  DURATION
===========================================================================
201906151800       make_grid  9443237  QUEUED            -      0       0.0
201906151800       make_orog        -       -            -      -         -
201906151800  make_sfc_climo        -       -            -      -         -
201906151800   get_extrn_ics  9443293    DEAD          256      3       5.0

This means that the dead task has not completed successfully, so the workflow has stopped. Once the issue has been identified and fixed (by referencing the log files in $EXPTDIR/log), users can re-run the failed task using the rocotorewind command:

rocotorewind -w FV3LAM_wflow.xml -d FV3LAM_wflow.db -v 10 -c 201906151800 -t get_extrn_ics

where -c specifies the cycle date (first column of rocotostat output) and -t represents the task name (second column of rocotostat output). After using rocotorewind, the next time rocotorun is used to advance the workflow, the job will be resubmitted.
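When several tasks are dead, the rewind commands can be assembled from the rocotostat output rather than typed by hand. The following Python sketch assumes the column layout shown above (cycle, task, job ID, state) and only builds the command strings using the flags quoted in this FAQ; the rewind_commands function is hypothetical and does not run Rocoto itself.

```python
ROCOTOSTAT_OUTPUT = """\
       CYCLE            TASK    JOBID   STATE  EXIT STATUS  TRIES  DURATION
===========================================================================
201906151800       make_grid  9443237  QUEUED            -      0       0.0
201906151800       make_orog        -       -            -      -         -
201906151800  make_sfc_climo        -       -            -      -         -
201906151800   get_extrn_ics  9443293    DEAD          256      3       5.0
"""


def rewind_commands(stat_output, xml="FV3LAM_wflow.xml", db="FV3LAM_wflow.db"):
    """Build one rocotorewind command per task whose state is DEAD."""
    commands = []
    for line in stat_output.splitlines():
        fields = line.split()
        # Data rows start with a 12-digit cycle; this skips the header and separator.
        is_data_row = len(fields) >= 4 and fields[0].isdigit() and len(fields[0]) == 12
        if is_data_row and fields[3] == "DEAD":
            cycle, task = fields[0], fields[1]
            commands.append(
                f"rocotorewind -w {xml} -d {db} -v 10 -c {cycle} -t {task}")
    return commands


for command in rewind_commands(ROCOTOSTAT_OUTPUT):
    print(command)
```

For the sample output, this prints the same rocotorewind command shown above for get_extrn_ics.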

To run a new experiment at a later time, users need to rerun the commands in Section 2.4.3.1 that reactivate the srw_app environment:

source /path/to/ufs-srweather-app/etc/lmod-setup.sh <platform>
module use /path/to/ufs-srweather-app/modulefiles
module load wflow_<platform>

where <platform> is a valid, lowercased machine name (see MACHINE in Section 3.1.1 for valid values), and /path/to/ is replaced by the actual path to the ufs-srweather-app.

Follow any instructions output by the console (e.g., conda activate srw_app).

Then, users can configure a new experiment by updating the experiment parameters in config.yaml to reflect the desired experiment configuration. Detailed instructions can be viewed in Section 2.4.3.2.2. Parameters and valid values are listed in Section 3.1. After adjusting the configuration file, generate the new experiment by running ./generate_FV3LAM_wflow.py. Check progress by navigating to the $EXPTDIR and running rocotostat -w FV3LAM_wflow.xml -d FV3LAM_wflow.db -v 10.

! Note

If users have updated their clone of the SRW App (e.g., via git pull or git fetch/git merge) since running their last experiment, and the updates include a change to Externals.cfg, users will need to rerun checkout_externals (instructions here) and rebuild the SRW App according to the instructions in Section 2.3.4.

If you encounter issues while generating ICs and LBCs for a predefined 3-km grid using the UFS SRW App, there are a number of troubleshooting options. The first step is always to check the log file for the failed task. This file will provide information on what went wrong. A log file for each task appears in the log subdirectory of the experiment directory (e.g., $EXPTDIR/log/make_ics).

Additionally, users can try increasing the number of processors or the wallclock time requested for the jobs. Sometimes jobs may fail without errors because the process is cut short. These settings can be adjusted in one of the ufs-srweather-app/parm/wflow files. For ICs/LBCs tasks, these parameters are set in the coldstart.yaml file.

Users can also update the hash of UFS_UTILS in the Externals.cfg file to the HEAD of that repository. There was a known memory issue with how chgres_cube was handling regridding of the 3-D wind field for large domains at high resolutions (see UFS_UTILS PR #766 and the associated issue for more information). If changing the hash in Externals.cfg, users will need to rerun manage_externals and rebuild the code (see Section 2.3).

In order for model layer reflectivity to be calculated, the lradar FV3 input variable needs to be set to True. For the CCPP physics suites FV3_GFS_v15p2 and FV3_GFS_v16, the lradar variable is set to false by default. In the SRW App, this variable is set to null for these two physics suites, which causes the CCPP default of false to be used. Thus, model layer reflectivity is not calculated, and the composite reflectivity will not be plotted. Users can override this by setting lradar to true in the SRW App's parm/FV3.input.yml file. The SRW App then generates radar-style composite reflectivity plots from the maximum reflectivity at each model layer.

Update line #508 of ufs-srweather-app/parm/FV3.input.yml.

lradar: True

Users should try reducing the number of MPI tasks allocated to the make_ics and make_lbcs tasks. Add the following section to the model configuration file, config.yaml:

rocoto:
  tasks:
    metatask_run_ensemble:
      task_make_ics_mem#mem#:
        nnodes: 1
        ppn: 12
      task_make_lbcs_mem#mem#:
        nnodes: 1
        ppn: 12

If the issue persists, try increasing the grid size by a bit and reducing the tasks per node (ppn) further.

Unified Post Processor (UPP) Questions

Users can find answers to many frequently asked UPP questions by referencing the UPP User’s Guide. Additional questions are included below.

The UPP is compatible with NetCDF4 when used on UFS model output.

We are not able to support all platform and compiler combinations out there but will try to help with specific issues when able. Users may request support on the UPP GitHub Discussions page. We always welcome and are grateful for user-contributed configurations.

Currently, the stand-alone release of the UPP can be utilized to output satellite fields if desired. The UPP documentation lists the grib2 fields, including satellite fields, produced by the UPP. After selecting which fields to output, the user must adjust the control file according to the instructions in the UPP documentation. When outputting satellite products, users should note that not all physics options are supported. Additionally, for regional runs, users must ensure that the satellite field of view overlaps some part of their domain.

Most UFS application releases do not currently support this capability, although it is available in the Short-Range Weather (SRW) Application. This SRW App pull request (PR) added the option for users to output satellite fields using the SRW App. The capability is documented in the SRW App User’s Guide.

If the desired variable is already available in the UPP code, then the user can simply add that variable to the postcntrl.xml file and remake the postxconfig-NT.txt file that the UPP reads. Please note that some variables may be dependent on the model and/or physics used.

If the desired variable is not already available in the UPP code, it can be added following the instructions for adding a new variable in the UPP User’s Guide.

There are a few possible reasons why a requested variable might not appear in the UPP output:

- The variable may be dependent on the model.

- Certain variables are dependent on the model configuration. For example, if a variable depends on a particular physics suite, it may not appear in the output when a different physics suite is used.

- The requested variable may depend on output from a different field that was not included in the model output.

If the user suspects that the UPP failed (e.g., no UPP output was produced or console output includes an error message like mv: cannot stat `GFSPRS.GrbF00`: No such file or directory), the best way to diagnose the issue is to consult the UPP runtime log file for errors. When using the standalone UPP with the run_upp script, this log file will be located in the postprd directory under the name upp.fHHH.out, where HHH refers to the 3-digit forecast hour being processed. When the UPP is used with the SRW App, the UPP log files can be found in the experiment directory under log/run_post_fHHH.log.
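The log file names described above follow a fixed pattern, so they can be built from the forecast hour. This is a small Python sketch under that assumption; the two helper functions and the example directories are hypothetical, and only the file name patterns (upp.fHHH.out in postprd, log/run_post_fHHH.log in the experiment directory) come from this FAQ.

```python
from pathlib import Path


def standalone_upp_log(postprd_dir, forecast_hour):
    """Log path for the standalone UPP run with the run_upp script."""
    # HHH is the forecast hour zero-padded to three digits.
    return Path(postprd_dir) / f"upp.f{forecast_hour:03d}.out"


def srw_upp_log(expt_dir, forecast_hour):
    """Log path for the UPP when it is run through the SRW App."""
    return Path(expt_dir) / "log" / f"run_post_f{forecast_hour:03d}.log"


# Hypothetical directories, used only to show the resulting names.
print(standalone_upp_log("postprd", 6))
print(srw_upp_log("/path/to/expt_dir", 12))
```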

UPP output is in standard grib2 format and can be interpolated to another grid using the third-party utility wgrib2. Some basic examples can also be found in the UPP User’s Guide.

This may be a memory issue; try increasing the number of central processing units (CPUs) or spreading them out across nodes (e.g., increase ptiles). We also know of one version of message passing interface (MPI) (mpich v3.0.4) that does not work with UPP. A work-around was found by modifying the UPP/sorc/ncep_post.fd/WRFPOST.f routine to change all unit 5 references (which is standard input/output (I/O)) to unit 4 instead.

For re-gridding grib2 unipost output, the wgrib2 utility can be used. See complete documentation on grid specification with examples of re-gridding for all available grid definitions. The Regridding section of the UPP User’s Guide also gives examples (including an example from operations) of using wgrib2 to interpolate to various common grids.

This warning appears for some platforms/compilers because a call in the nemsio library is never used or referenced for a serial build. This is just a warning and should not hinder a successful build of UPP or negatively impact your UPP run.

This error message is displayed when using more recent versions of the wgrib2 utility on files for forecast hour zero that contain accumulated or time-averaged fields. This is due to the newer versions of wgrib2 no longer allowing for the n parameter to be zero or empty.

Users should consider using a separate control file (e.g., postcntrl_gfs_f00.xml) for forecast hour zero that does not include accumulated or time-averaged fields, since they are zero anyway. Users can also continue to use an older version of wgrib2; v2.0.4 is the latest known version that does not result in this error.

Stochastic Physics

The stochastic physics unit tests are currently only enabled to run on Hera, so users must be logged into that machine first. After cloning the stochastic_physics repository, navigate to the unit_tests directory and run the run_standalone.sh script, which will submit the unit tests to Slurm as a batch job:

sbatch run_standalone.sh

The standalone_stochy.x executable will then be generated using GNU compilers, and when executed within the same shell script, six output data tiles are created in the newly generated stochy_out directory. Output tiles will be named workg_T82_504x248.tile0#.nc, where # refers to tiles numbered 1-6.
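After the batch job finishes, a quick check can confirm that all six tiles were written. The sketch below is a Python illustration that relies only on the directory and file naming described above; the helper names are hypothetical, and it assumes the script is run from the directory that contains stochy_out.

```python
from pathlib import Path


def expected_tiles(out_dir="stochy_out"):
    """Paths of the six output tiles, numbered 1-6."""
    return [Path(out_dir) / f"workg_T82_504x248.tile0{n}.nc" for n in range(1, 7)]


def missing_tiles(out_dir="stochy_out"):
    """Expected tiles that are not present on disk."""
    return [path for path in expected_tiles(out_dir) if not path.is_file()]


missing = missing_tiles()
if missing:
    print("Missing tiles:", ", ".join(path.name for path in missing))
else:
    print("All six tiles are present.")
```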

Stochastic physics itself does not include a main operational feature. Instead, it adds valuable scientific and numerical impact by addressing the dynamical effects of unresolved scales in the UFS Weather Model forecast system. Stochastic physics unit tests verify the functionality of each stochastic scheme. Currently, the following stochastic schemes are used operationally at NCEP/EMC: Stochastic Kinetic Energy Backscatter (SKEB; Berner et al., 2009), Stochastically Perturbed Physics (SPP; Palmer et al., 2009), Stochastically Perturbed Physics Tendencies (SPPT; Palmer et al., 2009), and Specific Humidity perturbations (SHUM; Tompkins and Berner, 2008). In addition, there are capabilities to perturb certain land model/surface parameters (Gehne et al., 2019; Draper, 2021) and a cellular automata scheme (Bengtsson et al., 2013), which interacts directly with the convective parameterization.

SPPT, SKEB, and SHUM are what are known as “ad-hoc” schemes, in that their perturbations are prescribed based on a white-noise perturbation field and added to parameter tendencies or state fields to achieve ensemble spread. SPP represents a more targeted stochastic physics method, where parameters within physics schemes are directly perturbed based on a perturbation field that is coherent in time and space. SPP achieves adequate spread through the perturbation of many parameters across multiple physics schemes. The land model/surface parameter perturbations are essentially an implementation of SPP within the land-surface model physics parameterization. The cellular automata scheme applies a stochastically perturbed organization of sub- and cross-grid convection within the cumulus scheme of a given model. More detailed information on these stochastic physics schemes can be found here.

The Global Ensemble Forecast System (GEFS) uses SPPT, SKEB, and SHUM. The soon-to-be-implemented Rapid-Refresh Forecast System (RRFS) will use SPP and possibly SPPT.